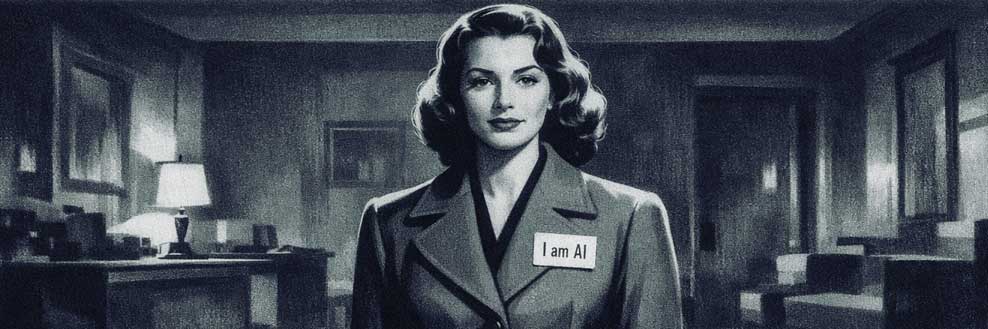

Chatbot identity disclosure

When we chat with artificial intelligence (AI), it’s easy to forget we’re not talking to a person. The chatbot writes in plain sentences, responds instantly, and never asks for a coffee break. But if she doesn’t say she’s a chatbot, we get off on the wrong foot. Trust comes from honesty.

Clear labels

Users don’t want detective work. “Is this a human or not?” shouldn’t be part of the puzzle. The fix is simple: say it upfront. Put it in the greeting. Put it in the interface. The same way a button should look like a button, an AI should say she’s an AI.
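Here is one way "put it in the greeting, put it in the interface" might look in code. This is a minimal sketch, not a prescribed design: the `ChatSession` class, its method names, and the disclosure wording are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical wording; pick whatever fits your product's voice.
DISCLOSURE = "I'm a chatbot, not a person."


@dataclass
class ChatSession:
    """Hypothetical chat session that discloses its nature in two places."""

    bot_name: str = "Assistant"

    def interface_label(self) -> str:
        # Text for a persistent badge shown next to every bot message.
        return f"{self.bot_name} (AI chatbot)"

    def greeting(self) -> str:
        # The first message carries the disclosure before anything else.
        return f"Hi! {DISCLOSURE} How can I help?"


session = ChatSession(bot_name="Helper")
print(session.interface_label())
print(session.greeting())
```

The label covers users who skim past the greeting, and the greeting covers users who never look at the label. Two places, one message.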

Why disclosure works

We trust systems that don’t try to trick us. When she says, “I’m a chatbot,” we know what to expect. Faster answers, sure. Empathy? Maybe not. The disclosure sets limits. And limits make the interaction safer and less awkward.

What happens if we don’t

Skip the disclosure and users will find out anyway—usually the hard way. They’ll notice odd phrasing. Or get frustrated when she misunderstands a joke. That discovery erodes trust faster than a 404 page. No one likes realizing they were misled.

A coder’s thought

We design prompts, not personas. Our job is to keep users oriented. If she’s software, say so. It’s not a burden—it’s just good manners.
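"Say so" can also mean answering honestly when a user asks directly. A rough sketch, assuming a simple regex check is good enough for a first pass: the pattern, the `answer_identity_question` helper, and the reply text are illustrative assumptions, and a real system would need broader coverage.

```python
import re
from typing import Optional

# Hypothetical pattern for "who am I talking to?" style questions.
IDENTITY_QUESTION = re.compile(
    r"\b(are you|is this)\s+(a\s+|an\s+)?(real\s+)?"
    r"(human|person|bot|chatbot|robot|ai)\b",
    re.IGNORECASE,
)

# Hypothetical wording for the honest answer.
HONEST_REPLY = "I'm a chatbot, not a person."


def answer_identity_question(message: str) -> Optional[str]:
    """Return a fixed honest reply if the user asks what they are talking to.

    Returns None for any other message, so the caller can fall through
    to the normal response path.
    """
    if IDENTITY_QUESTION.search(message):
        return HONEST_REPLY
    return None


print(answer_identity_question("Are you a real person?"))
print(answer_identity_question("What's the weather like?"))
```

The reply is fixed on purpose: this is the one question where the answer should never depend on how the prompt was written.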