Explainable AI

We trust systems more when we can see how they think. Same with artificial intelligence (AI). She makes predictions that feel magical until we ask, “Why that answer?” That’s where explainable AI comes in.

Interpretability

Interpretability means turning her hidden math into something we can read. Not perfect truth, but useful hints. It’s like opening a black box just enough to see which gears are moving.

SHAP

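SHAP (SHapley Additive exPlanations) borrows Shapley values from game theory. Think of every input (age, zip code, credit score) as a “player” in her game. SHAP shows how much each player pushed the final call up or down compared with a baseline prediction, and those contributions add up to the gap between the two. It’s tidy and consistent, but exact Shapley values mean checking every combination of players. That makes it slow on big models, which is why practical tools lean on approximations or model-specific shortcuts. Still, we get a ranked list we can act on.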
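To see the game-theory idea in miniature, here is a minimal sketch that computes exact Shapley values by brute force over every coalition of features. It assumes that a feature “absent” from a coalition is swapped for a baseline value, which is only one of several ways to model absence. The names `shapley_values`, `toy_model`, and the all-zero baseline are hypothetical, and this is not the `shap` library’s API. The 2^n model calls per feature are exactly why the real thing gets slow.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[list[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one prediction, by brute force.

    Assumption: a feature left out of a coalition is replaced by its
    baseline value. Cost is about 2**n model calls per feature.
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Keep coalition members from x; fill everyone else from the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight: |S|! * (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of player i joining coalition s.
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_model(z: list[float]) -> float:
    # Hypothetical scoring function with one interaction term.
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(toy_model, x, baseline)
print([round(p, 3) for p in phis])  # → [2.0, 3.5, 1.5]
```

The three contributions sum to 7.0, the gap between `toy_model(x)` and `toy_model(baseline)`, and the interaction term’s 3.0 is split evenly between features 1 and 2.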

LIME

LIME (Local Interpretable Model-agnostic Explanations) goes smaller. It nudges one input many times, watches how her answers change, and fits a simple stand-in model (usually linear) to those nearby results. That lets us see what tipped the scales for that single case. It’s often faster than SHAP and less precise, but handy when we only care about the “why” behind one output.
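Here is a rough sketch of the LIME idea in plain Python: jitter one input, weight each jittered copy by how close it stays to the original, and fit a weighted linear model to her answers. The assumptions are ours, not LIME’s exact recipe: Gaussian noise in raw feature space, an exponential distance kernel, and ordinary weighted least squares. The `lime` package’s tabular explainer adds extras such as feature discretization and a regularized fit. The names `local_surrogate`, `black_box`, `scale`, and `kernel_width` are made up for illustration.

```python
import math
import random
from typing import Callable, Sequence


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the small linear system a @ w = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_surrogate(
    model: Callable[[list[float]], float],
    x: Sequence[float],
    n_samples: int = 500,
    scale: float = 0.5,
    kernel_width: float = 1.0,
    seed: int = 0,
) -> tuple[float, list[float]]:
    """Fit a weighted linear stand-in model around one input x."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y,
    # with a leading column of ones for the intercept.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        # Jitter every feature with Gaussian noise (an assumption).
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        # Exponential kernel: samples closer to x count more.
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)
        row = [1.0] + z
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[0], coef[1:]


def black_box(z: list[float]) -> float:
    # Hypothetical nonlinear model standing in for "her".
    return math.sin(z[0]) + z[1] ** 2


intercept, coefs = local_surrogate(black_box, [0.0, 1.0])
print(coefs)  # local slopes, roughly near cos(0) = 1 and 2 * 1 = 2
```

The coefficients describe her behavior only in this neighborhood; move `x` and the story can change. Because the sine curve bends, its slope estimate lands a little under 1 rather than exactly on it.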

Other methods

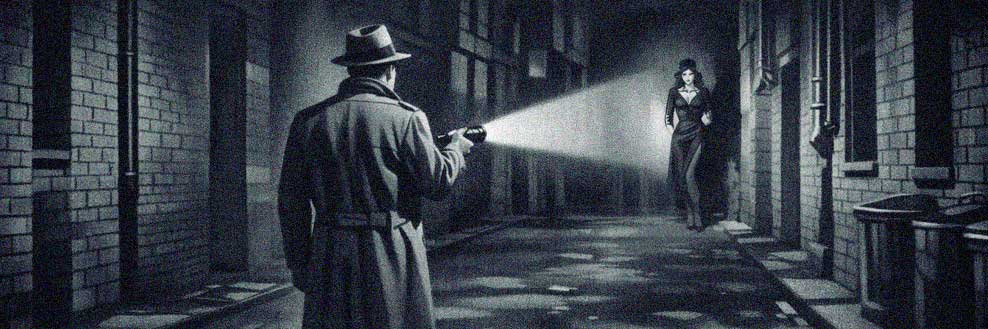

There are heatmaps that highlight the pixels she leaned on most. There are decision trees trained to mimic her choices. There are partial dependence plots that say, “If we nudge this input, here’s how she reacts on average.” Each method is a flashlight in the dark: narrow but useful.
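Partial dependence is simple enough to write out by hand. A minimal sketch, assuming the model takes a list of feature values and `rows` is a sample of real inputs (`partial_dependence` and `toy_model` are hypothetical names): for each grid value, force one feature to that value in every row and average her predictions.

```python
from typing import Callable, Sequence


def partial_dependence(
    model: Callable[[list[float]], float],
    rows: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> list[float]:
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            z = list(row)
            z[feature] = value  # nudge only this input; keep the rest as observed
            total += model(z)
        curve.append(total / len(rows))
    return curve


def toy_model(z: list[float]) -> float:
    # Hypothetical model: linear in feature 0, quadratic in feature 1.
    return 2.0 * z[0] + z[1] ** 2


rows = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
curve = partial_dependence(toy_model, rows, feature=0, grid=[0.0, 1.0, 2.0])
print([round(v, 3) for v in curve])  # → [4.667, 6.667, 8.667]
```

Because the curve averages over the other features, it can hide interactions that individual predictions would reveal.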

Our takeaway

We don’t need every gear to trust her. We just need enough to explain choices to a boss, a regulator, or ourselves. The trick is to pick the right tool for the right moment.

We’re coders. We know black boxes can’t stay black forever. If she’s going to work with us, we’d better see how she thinks—at least in outline.